Nvidia Corporation (stylized as NVIDIA) is a leading American technology company best known for designing graphics processing units (GPUs) and advanced computing platforms for gaming, professional visualization, high-performance computing, and artificial intelligence (AI). It was co-founded in 1993 by Jensen Huang, Chris Malachowsky, and Curtis Priem. Huang has served as CEO since the founding and played a pivotal role in steering the company from graphics chips toward dominance in AI computing. (Wikipedia)

Today, Nvidia's products serve a wide range of customers: GeForce GPUs for PC gaming, professional GPUs for content creation and scientific research, AI and data center accelerators for cloud and enterprise computing, platforms for autonomous vehicles, and gaming and multimedia devices. By developing both hardware (such as its Blackwell AI chips) and software ecosystems (including the CUDA parallel computing platform), Nvidia supports customers ranging from gamers and creative professionals to AI researchers and large tech companies. (Wikipedia)

In 2025, Nvidia reached remarkable financial milestones. It reported record revenue of about $130.5 billion for fiscal 2025 (which ended in January 2025), more than double the previous year's figure, driven largely by demand for data center and AI hardware. Later in 2025, Nvidia's market valuation reached about $5 trillion, making it one of the most valuable companies in the world and underscoring its central role in the global AI ecosystem. (NVIDIA Newsroom)

Did Nvidia Only Make GPUs? Why They Entered the AI Chip Market

🧠 1. Nvidia's GPUs Were Never Just for Gaming

Nvidia first became famous for graphics processing units (GPUs), chips designed to handle the intense math behind video rendering and 3D graphics in games. Over time, its GPUs also became essential in professional visualization, scientific computing, data centers, and machine learning, because:

- GPUs can perform many calculations in parallel

- This makes them well suited to AI training and inference, which rely heavily on matrix multiplication

- Nvidia developed software like CUDA, attracting developers to its ecosystem
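To see why parallel hardware fits AI so well, consider matrix multiplication, the core operation in neural networks. The toy sketch below is plain Python running on a CPU, not GPU or CUDA code, and the function names are hypothetical. It computes each output element as its own dot product and deliberately fills them in a shuffled order, showing that no element depends on another, which is exactly the kind of work a GPU can spread across thousands of threads.

```python
import random

def matmul_cell(a, b, i, j):
    """Compute one output element C[i][j]: the dot product of row i of A and column j of B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul_any_order(a, b, seed=0):
    """Fill C one cell at a time in a shuffled order.

    No cell reads another cell's result, so the order does not matter.
    On a GPU, each cell (or tile of cells) could be handled by a separate thread.
    Returns the product matrix and the number of independent dot products.
    """
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(cells)  # scramble the work order on purpose
    c = [[0] * cols for _ in range(rows)]
    for i, j in cells:
        c[i][j] = matmul_cell(a, b, i, j)
    return c, len(cells)

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C, tasks = matmul_any_order(A, B)
print(C)      # [[19, 22], [43, 50]]
print(tasks)  # 4 independent dot products
```

The count grows quickly: a hypothetical layer with a 4096 × 4096 output already involves 16,777,216 independent dot products, far more than a CPU has cores but a natural fit for a GPU.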

So while Nvidia is best known for GPUs, its chips have long been used well beyond gaming.

🧠 2. Why Nvidia Decided to Make AI Chips

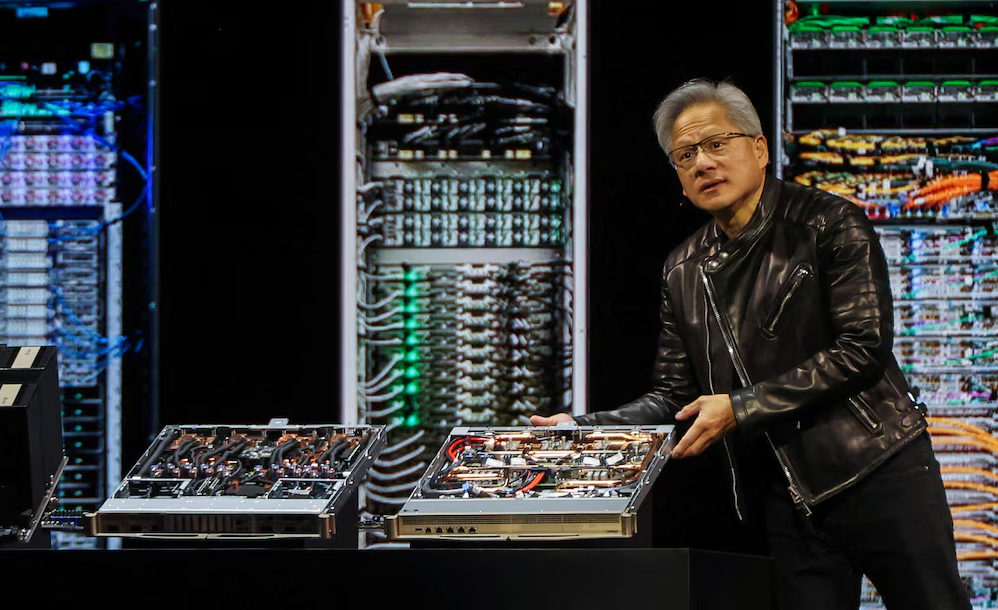

Nvidia's push into dedicated AI chips (such as the Hopper and Blackwell data center GPUs and the Grace Hopper superchips) was not random. It was a strategic evolution driven by several factors:

• AI Workloads Need More Efficiency

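GPUs are good general-purpose accelerators, but AI tasks (training large models, serving inference at scale) benefit from architectures optimized for matrix math, lower-precision number formats, and high memory bandwidth. Nvidia's data center chips address this with Tensor Cores, support for low-precision formats such as FP8, and high-bandwidth memory (HBM).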
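A little arithmetic shows why lower-precision formats matter. The sketch below assumes a hypothetical 70-billion-parameter model and counts weight memory only (activations, caches, and optimizer state are ignored). Python has no native FP8 type, so the FP8 row is bytes-per-value arithmetic only; the FP16 rounding uses the standard `struct` module's half-precision `'e'` format to show the precision that is traded away.

```python
import struct

# Bytes per stored value: FP32 = 4, FP16 (or BF16) = 2, FP8 = 1.
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "FP8": 1}

def weight_memory_gb(num_params, fmt):
    """Memory for model weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_VALUE[fmt] / 1e9

def to_fp16(x):
    """Round a Python float to IEEE 754 half precision and back via struct's 'e' format."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

params = 70_000_000_000  # hypothetical 70-billion-parameter model
for fmt in BYTES_PER_VALUE:
    print(fmt, weight_memory_gb(params, fmt), "GB")
# FP32 280.0 GB, FP16 140.0 GB, FP8 70.0 GB

print(to_fp16(0.1))  # 0.0999755859375: FP16 keeps only about 3 significant decimal digits
```

Halving the bytes per value halves both the memory needed and the data that must move through memory per operation, which is why AI-focused chips accept the small loss of precision.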

• Explosive Growth of AI Demand

Emerging AI applications, from large language models to autonomous vehicles and cloud inference, require specialized hardware that scales more efficiently than traditional GPUs.

• Data Center & Cloud Acceleration Market

AI-optimized data center chips, not PCs or gaming, are the fastest-growing segment of the chip market. Nvidia recognized this early and invested heavily in AI silicon, software, and platforms.

🧠 3. Did Nvidia Cut GPU Production to Build AI Chips?

It’s not exactly true that Nvidia simply “stopped making GPUs.” What happened is more nuanced:

• Nvidia Still Produces GPUs

- Gaming GPUs (GeForce) continue to sell strongly.

- Professional GPUs (formerly branded Quadro, now NVIDIA RTX) remain core products.

- Data center GPU accelerators are a huge revenue driver.

• Production Shifts With Market Demand

GPU production and shipments follow demand. If demand for gaming GPUs slows temporarily (because of inventory cycles, macroeconomic conditions, pricing, and so on), Nvidia, which is fabless and relies on foundries such as TSMC, may rebalance its wafer orders to prioritize AI-focused silicon without cutting GPU production entirely.

• AI Chips Are Not Replacements

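Data center accelerators such as the H100, Blackwell-based chips, and GH200 Grace Hopper superchips (which pair a Grace CPU with a Hopper GPU) complement Nvidia's consumer and professional GPU lineup rather than replacing it. These accelerators are themselves GPUs, built for large-scale AI workloads that consumer GPUs cannot handle as efficiently.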

🧠 4. Why Nvidia’s AI Strategy Matters

Nvidia’s AI chips are part of:

• A Larger Software + Hardware Ecosystem

Software such as CUDA, cuDNN, and TensorRT, together with DGX systems, lets Nvidia's silicon work seamlessly with developer tools and cloud infrastructure.

• Cloud & Enterprise Growth

AI hardware is now a major revenue driver, supported by partnerships with hyperscalers (AWS, Microsoft Azure, Google Cloud) and private AI clusters.

• Long-Term Strategic Positioning

By leading in AI silicon, Nvidia:

- Moves beyond the cyclical nature of consumer GPU markets

- Taps into long-term enterprise and AI compute demand

- Competes with AI chips from startups such as Graphcore and Cerebras, with CPUs, and with custom silicon from cloud providers (such as Google's TPUs)

📊 Before & After: NVIDIA GPU vs AI Chip Production (Illustrative Timeline)

| Year / Period | Primary Focus | Major Chips | Production & Demand |
|---|---|---|---|
| 2020 | Data center GPUs for AI and HPC | A100 (Ampere) | A100 launches and expands GPU use in AI data centers (Wikipedia) |
| 2021 | Gaming + data center GPUs | A100 (continued) | Strong data center demand for AI compute (Wikipedia) |
| 2022 | AI acceleration surge | H100 (Hopper) | H100 announced; demand soon outstrips supply, causing severe backlogs (NVIDIA Newsroom) |
| 2023–2024 | Scaling AI data center platforms | H200, GB200 (Blackwell announced in 2024) | Production ramps; AI GPUs projected to ship in high volume (CRN) |
| 2025 | AI-centric releases and an expanded AI lineup | Blackwell & successors | Shift toward the Blackwell platform, with a strong production ramp planned (CRN) |
| 2026–2027 | Next-generation compute | Vera Rubin, Rubin Ultra, future platforms | Continued shift to specialized AI and combined CPU+GPU systems (Wikipedia) |
